1 Year of ChatGPT - The Investor’s Rollercoaster Depicted

A Ride Through the Ups and Downs of AI Investing Trends and Investor Sentiment Over the Past 12 Months

Exactly one year ago, on November 30, 2022, OpenAI released the initial version of ChatGPT to the public. Looking back at the last 12 months, it is incredible how much has happened in AI across every part of the value chain. Let’s use this one-year anniversary to look back at what the launch did to the AI startup ecosystem in Europe, where sentiment stands today, and what might come next.

The perfect AI storm

To many, especially those who had few touchpoints with AI beforehand, the launch of ChatGPT seemed like the Cambrian explosion of AI. For the first time, everyone could experience the potency of large language models firsthand and have an immediate "wow" moment.

Under the hood, however, ChatGPT was merely the match that lit a powder keg: the convergence of tectonic technology shifts that had been building over the past 70+ years.

The AI value chain

To better portray the individual developments, I first want to segment the AI value chain into four layers: the infrastructure layer, consisting of semiconductor manufacturers and the hyperscalers that use their chips to offer compute; the intelligence layer, made up of foundation model providers that build and train large models across different modalities and make them accessible (via closed APIs or open source); the middle layer, offering developer tooling that makes it easier to build on these models; and finally the application layer, which builds ready-to-consume enterprise or consumer products.

Infrastructure layer

While compute infrastructure has always been an enabling technology for AI, for a long time it was not at the center of discussion or VC interest (with some exceptions, e.g. Graphcore). With the sharp rise in the size and complexity of foundation models, and their widespread deployment (and thus inference), infrastructure quickly became a real bottleneck. This is why not only the dominant incumbents (especially Nvidia) are now gearing up and launching new purpose-built hardware (e.g. the H200), but more startups are also bringing new concepts to the table to solve the issue, e.g. in-memory compute.

Foundation Model layer

For many the most interesting part of the value chain, the foundation model layer is led by players that have been active for years. OpenAI, for example, was founded in 2015, and its European counterpart Aleph Alpha, where Earlybird led the Series A in 2021, was founded in 2019, as was Stability AI, the publisher of the Stable Diffusion models. Anthropic (started by former OpenAI employees), a relatively late starter, was founded in 2021, but still before the landmark ChatGPT launch.

With these players, many of which had already launched several generations of models, one might have thought the segment was already fairly saturated, especially given the high barriers to entry: scarce talent in the space and the large amounts of capital needed to train the models, which together create winner-takes-most dynamics.

Nevertheless, new broad, generalist foundation model companies were still being started (e.g. Mistral AI in early 2023). What is more, we saw the launch of many more specialized foundation models, either for other modalities (e.g. music or molecular structures) or for specific languages (e.g. Spanish).

While it mostly remains to be seen how the power dynamics will eventually play out, there is a case to be made that just a handful of foundation model players will capture the lion’s share of the value across 80% of modalities, use cases, etc., leaving some room for more specialized (maybe smaller and more efficient?) model providers: the ones we saw being started over the last twelve months, or new ones still to come.

(Side note: I want to re-emphasize that this is the early-stage lens, as some enormous growth rounds are still happening in this segment at the moment.)

Middle layer

The LLM DevTooling layer, positioned between the foundation model layer and the application layer, comprises tools that help developers build on and interact with foundation models more effectively and efficiently.

From an early-stage investing lens, this was perhaps the most interesting segment of all over the last twelve months. It barely existed a year ago, yet it attracted many extremely talented founders to start building in the space, many of whom came out of the prominent foundation model companies and thus know very well how to work with these models. In the first half of 2023, companies from this bucket largely dominated the early-stage fundraising scene.

It is hard to pinpoint exactly when the tipping point came, but today investor sentiment towards this category seems to have shifted. The problem is that the market became crowded very quickly: many companies initially tackled different parts of the tooling landscape (e.g. prompt engineering vs. model orchestration vs. model observability vs. fine-tuning and/or RAG, and many more), yet most pitched a similar mid- to long-term roadmap and vision. While the market potential is certainly large, it will most likely not be large enough to support the sheer number of approaches we see now. Many dominant players from other parts of the stack are also pushing into it with a more full-stack approach, and many companies with similar offerings for the traditional ML/data stack are tweaking and expanding their products to cover LLM use cases as well.

This is not to say I am no longer bullish on the segment, nor is it meant to discourage anyone. It is rather a gentle nudge to think about how one can fit into a stack that will likely consist of a handful of relevant, broad tooling platforms, and what one’s right to win in the segment is.

Application layer

The application layer, at the top of the AI value chain, leverages the capabilities enabled by the lower layers of the stack to bring great experiences to end users, both businesses and consumers. It is the most tangible and visible layer of all.

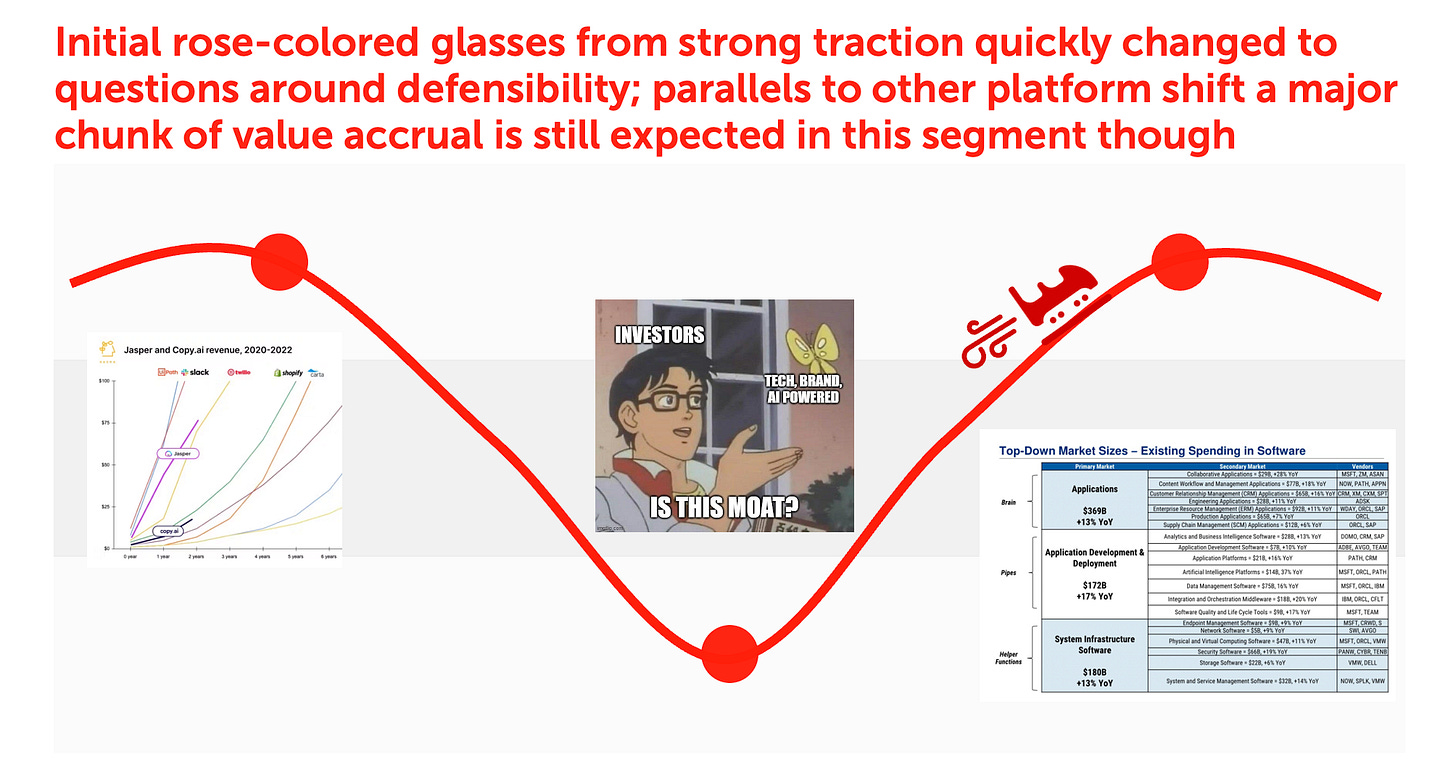

Over the last twelve months, we’ve seen many everyday white-collar business tasks automated by AI applications. Examples are listed below. While early versions of some of today’s more prominent applications, like Copilot or Jasper, were available before, the number of applications increased drastically after ChatGPT.

Many early hero use cases gained incredible traction very early on, sparking initial hype around the application segment. As the middle layer emerged and made it far easier to replicate applications built on the same underlying foundation models, the big question of “defensibility” was raised: can a product build strong moats that protect it from competition, not only now but especially in the long term? With that question, investor appetite for the segment declined for a while.

The current view seems more balanced again, even optimistic. The success of many individual cases is early proof that there is value to be captured in this layer, as founders make compelling cases for potential moats, e.g. through proprietary data, data flywheels, or RLHF, and thus create products that are significantly better than the alternatives.

The time lag between previous platform shifts (e.g. mobile) and the emergence of their winning applications is also comforting, as it suggests that it may still take some time and a maturing ecosystem before the winners of this wave are crowned.

Where do we go from here?

Making predictions in a space as dynamic as AI is hard, but it’s also fun. Here are some expectations that feel natural to me (though we may well need to revisit them over the next year):

We’ll see a similar rollercoaster in adjacent segments such as autonomous agents, across models (e.g. action models à la Adept), DevTooling, especially frameworks (e.g. AutoGPT), applications (Qevlar & Co.), and adjacent infrastructure like distribution channels

Resolution of the compute bottleneck: more compute-efficient models meeting more capable purpose-built hardware

Convergence of foundation models: a handful of large providers capturing most of the value

Thinning out of the middle layer: a select few companies offering holistic end-to-end (E2E) tooling suites for engineers to build on foundation models

The emergence of strong and defensible application companies: teams finding their way to unfair advantages through access to data or other means

I am excited about what the year ahead will bring for founders, operators, and investors in AI. Are you, too? Let’s take a seat on the AI rollercoaster and enjoy a second ride.