The Myth of Proprietary Data

Why Many Proprietary Data Claims Don’t Hold Up Under Pressure

Every AI startup pitch includes a “data moat”. Few, in comparison, possess one.

Investors continue to cling to the idea of proprietary data as the ultimate defense. In 2025, this belief increasingly feels like a holdover from a previous technological era. Once upon a time, proprietary data stood as a near-impenetrable fortress. Think of Google’s search logs or Facebook’s social graphs. These vast, unique datasets offered early internet giants decisive advantages. Data was scarce. Collecting it was hard. Moats were real.

But the dynamics have shifted. Foundation models, trained on broad public datasets, have fundamentally altered the landscape. These models are capable of generalizing across tasks that once required bespoke datasets. Owning a small, unique pool of data no longer guarantees leverage. The rise of open datasets and synthetic generation methods further weakens data’s scarcity. Specialized data vendors like Scale AI (industrializing data labeling at volume) or Surge AI (serving top AI labs with high-quality, feedback-optimized annotations) also accelerate this shift, turning data into a commodity for some and a premium service for others. If data is abundant or accessible on demand, it ceases to be defensible.

Even proprietary user interaction data - often cited as a strategic edge - tends to be limited. Such data is typically narrow, noisy, and difficult to translate into meaningful performance improvements. Increasingly, the question is not who owns the data, but who uses it most effectively. Raw data itself rarely confers advantage. The infrastructure to clean, process, and extract insights from that data may matter far more. In this sense, the moat is not the asset but the ability to operationalize it.

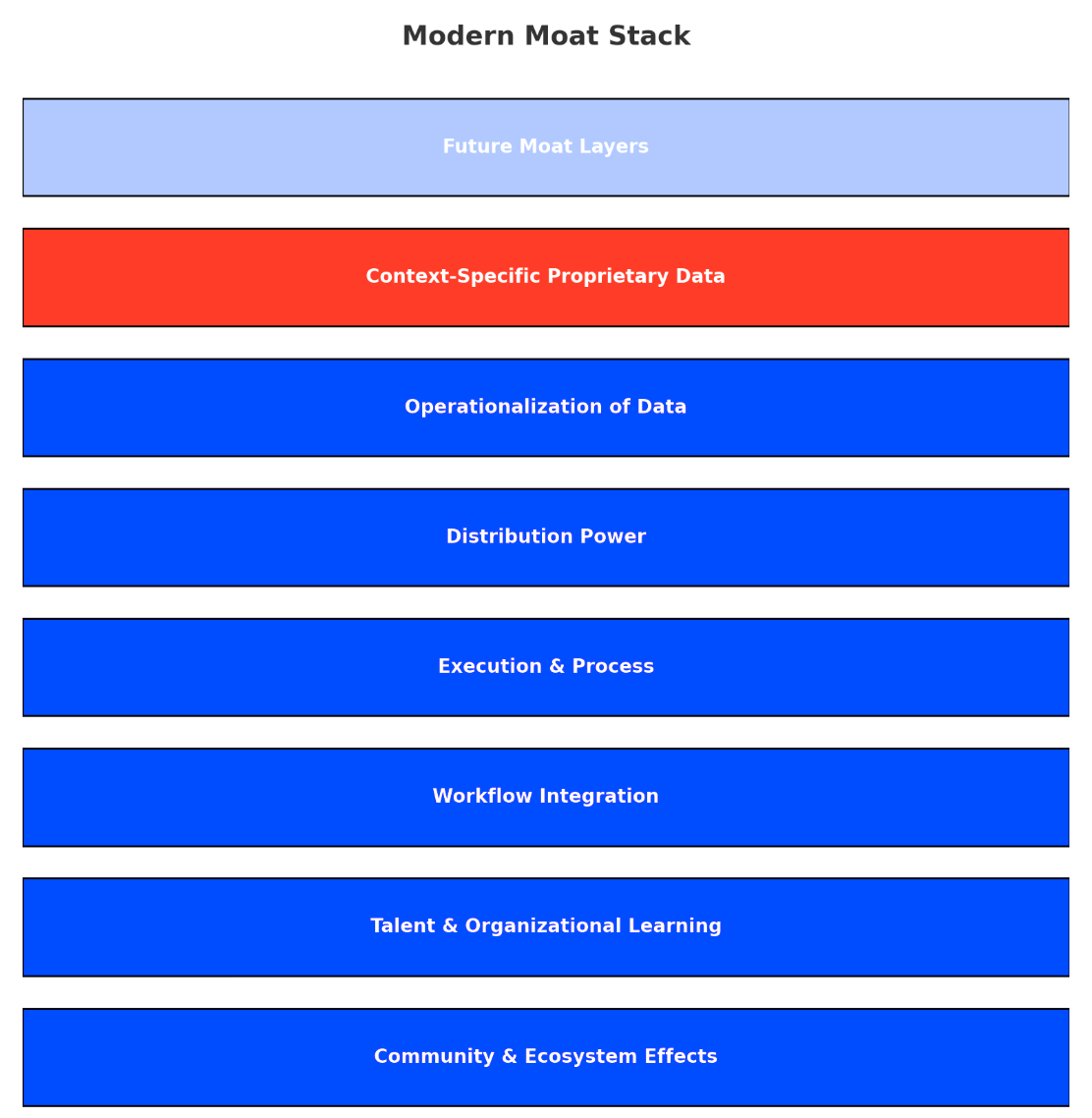

If proprietary data no longer serves as a guaranteed defense, what does? Perhaps moats today are more fluid, built from a combination of factors rather than a single source. Distribution advantages deserve closer attention. In an AI-driven world, ownership of customer access and control over key distribution channels may create more defensibility than proprietary datasets themselves. Even technically weaker solutions can dominate when they embed directly within customer ecosystems or leverage established distribution networks. For AI companies especially, integrating outputs seamlessly into existing platforms - be it through APIs, email, or collaboration tools - can establish durable distribution moats that are difficult to displace. Execution and process are frequently cited. The capacity to iterate rapidly, retrain models, and own robust deployment pipelines may confer a form of defensibility rooted in speed and adaptability. Embedding models deeply into customer workflows can make replacements costly and complex, offering another layer of protection.

Control over critical workflows, particularly in B2B or developer-focused contexts, can similarly create stickiness. The more tightly a solution integrates into mission-critical processes, the harder it becomes to displace. Talent, too, may serve as an overlooked moat. Organizations capable of attracting specialized expertise and operationalizing continuous learning can maintain competitive momentum, regardless of the exclusivity of their datasets. In some cases, building communities and ecosystems around a product shifts defensibility from proprietary data to network effects. When external developers or users amplify a product’s reach and functionality, the moat is no longer static but self-reinforcing.

That said, proprietary data still retains strategic value in specific contexts. Highly regulated industries such as healthcare, finance, and insurance may still depend on sensitive datasets that remain scarce and protected. Domain-specific models trained on vertical datasets, such as legal documents or technical manuals, may outperform generalist models and retain defensibility. Physical world data generated by robotics or autonomous vehicles remains difficult to replicate, offering another exception. Long-standing incumbents, from credit bureaus to industrial firms, may retain proprietary datasets accumulated over decades, preserving historical advantages that new entrants struggle to overcome.

A potential recipe to make proprietary data defensible might start with ensuring true scarcity: collecting or generating data that others cannot easily access or replicate. But scarcity alone is insufficient. Success likely depends on pairing unique datasets with tailored, domain-specific model architectures and robust data infrastructure capable of turning raw information into actionable insights. Finally, continuously feeding product usage and feedback data back into retraining pipelines can help build a self-reinforcing loop that strengthens defensibility over time.

So why does the myth of the data moat persist? Part of the answer is simplicity. The idea of a “data moat” fits neatly into pitch decks and investment memos. It offers cognitive comfort to investors seeking clear defensibility. Founders, understanding this dynamic, emphasize proprietary data narratives in their pitches. And investors, wary of backing thin wrappers on existing models, want to believe that hidden depth lies somewhere beneath the surface.

Yet in the foundation model era, defensibility increasingly looks less like a database and more like an operating system. It is built through execution, integration, organizational learning, and ecosystem development. Investors and founders alike may need to shift their lens, from who owns the data to who controls the process, the customer relationship, and the capacity for ongoing adaptation.

The proprietary data moat may not be dead. But for most, it is likely a mirage.